zbai at cs.rochester.edu

(585) 275-7758

3007 Wegmans Hall

Dept. of Computer Science

University of Rochester

Zhen Bai is an Assistant Professor in the Department of Computer Science at the University of Rochester, where she leads the inter.play lab and co-directs the ROCHCI group. She is an affiliated faculty member of the Goergen Institute for Data Science and Artificial Intelligence.

Zhen's research explores how to cultivate a lifelong playground - one that offers playful, spontaneous and collaborative support for learning and communication, grows with children throughout their lives, and helps them become AI-responsible future citizens. Zhen creates embodied and intelligent user interfaces, including Augmented and Virtual Reality (AR/VR), Tangible User Interfaces (TUI), and intelligent agents, to support STEM learning (e.g., AI literacy, scientific inquiry) and social-emotional learning (e.g., social understanding, curiosity, communication) for children with diverse needs and backgrounds (e.g., autism, deaf and hard of hearing).

Zhen’s work has been recognized by the NSF CAREER award, the Google Inclusion Research Award, the Asaro Biggar family fellowship, and several Best Paper honorable mention awards (AIED’25, AIED’22, IDC’22, EC-TEL’17, ISMAR’13). Zhen has recently served on the CHI Associate Committee (’24-present), the ISMAR’24 Steering Committee, and the IDC Conference Committee (’23, ’26).

Zhen completed her PhD in Computer Science in the Graphics & Interaction Group (Rainbow) at the University of Cambridge, advised by Prof. Alan Blackwell. Before joining the University of Rochester, she was a post-doctoral fellow at Carnegie Mellon University's Human-Computer Interaction Institute (HCII) and Language Technologies Institute (LTI), working with Prof. Justine Cassell and Prof. Jessica Hammer.

For prospective students interested in PhD research opportunities, please find details here.

Research Highlights

Selected Projects

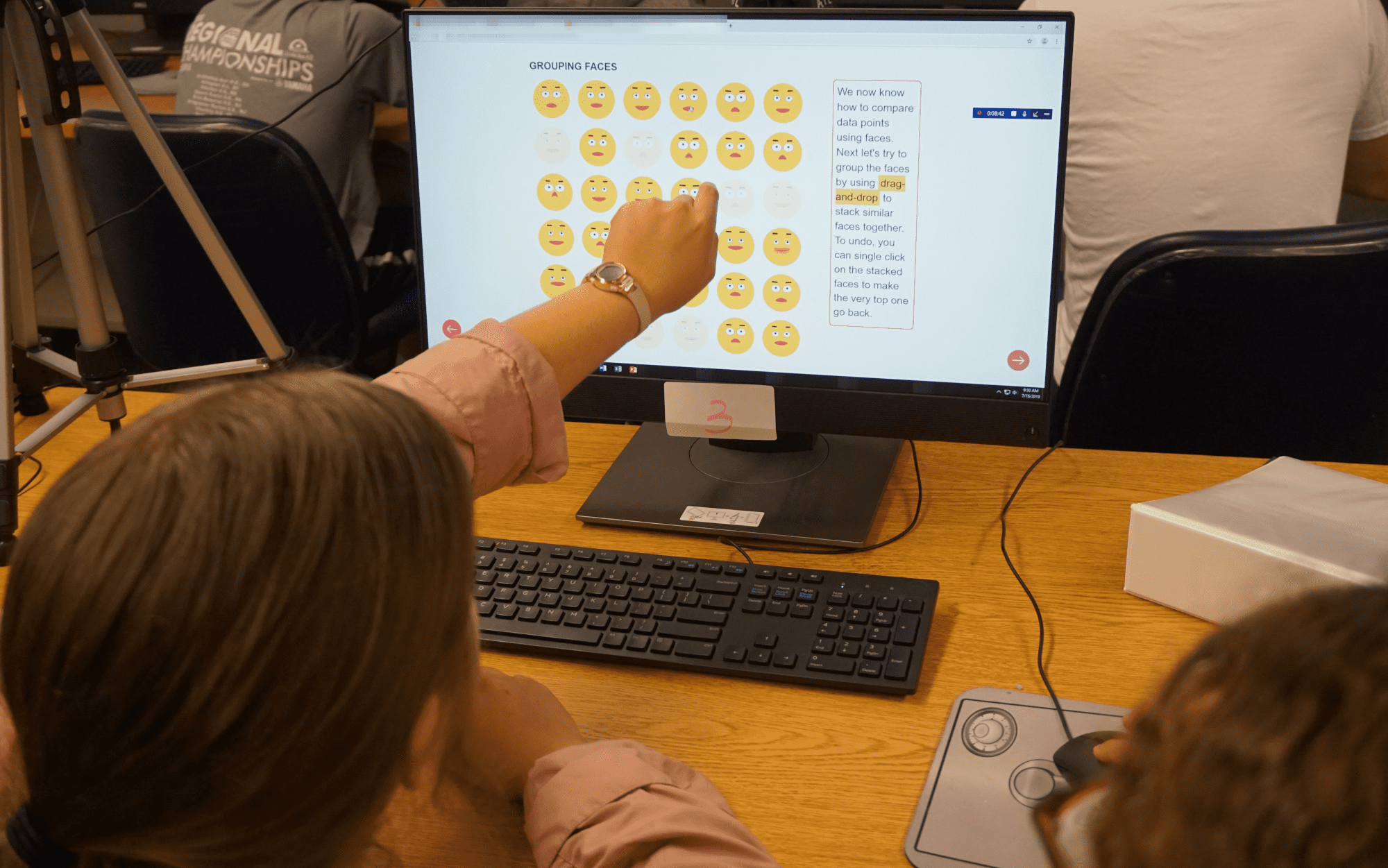

How to Integrate Accessible ML Learning Experiences into K-12 STEM Learning?

Selected papers: [IDC'20] [AIED'21] [ijAIED'22] [ISLS'24] [AIED'25]

There is an increasing need to prepare young learners to be Artificial Intelligence (AI)-capable for the future workforce and everyday life. Machine Learning (ML), an integral subfield of AI, has become a new engine that is revolutionizing practices of knowledge discovery. We developed playful learning environments - SmileyCluster, SmileyDiscovery, ML4Inq - that use glyph-based data visualization and exploratory visual comparison techniques to help K-12 students and teachers with limited math and computing skills gain basic knowledge of k-means clustering, k-NN classification and regression. Findings from a pre-college summer school show that these learning environments effectively support students in authentic learning activities that combine learning key ML concepts, such as multi-dimensional feature space and similarity comparison, with using ML as a new knowledge discovery tool to explore STEM subjects through data-driven scientific inquiry.

Can Embodied Learning Help K-12 Students Grasp the Underlying Concepts of AI Technologies?

Selected papers: [ISLS'24] [IDC'24] [CHI'25] [ISLS'25]

Most K-12 AI education treats AI as a “black box”, focusing on how to use it rather than how it works. Without seeing the inner workings, children risk developing misconceptions, often overestimating and over-trusting AI. Once misunderstandings take root, they can be hard to change. This work challenges the conventional approach and launches a new research agenda to create innovative learning technologies that demystify the inner workings of AI. We focus on the fundamental building blocks of AI that underpin machine learning (ML), from basic algorithms like k-means clustering to recommendation systems and embedding-based deep neural networks. We explore the design space of embodied metaphors to guide the design of future learning technologies that connect abstract and complex AI concepts to sensorimotor and analogical experiences familiar to novices.

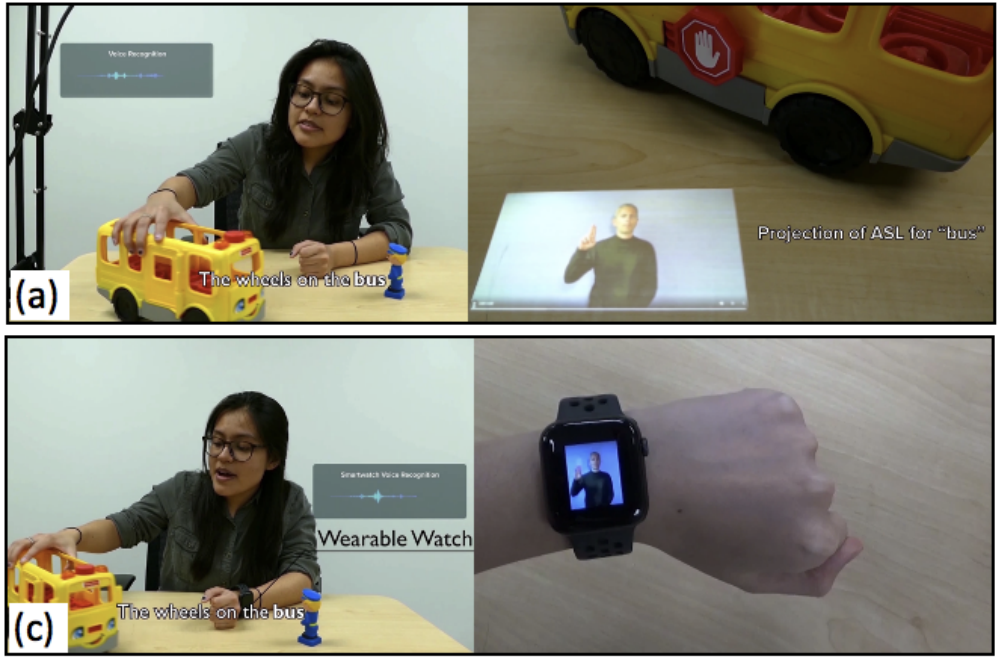

How to Support Non-Intrusive Communication between Deaf Children and Hearing Parents?

Selected papers: [ISMAR'19] [ASSETS'22] [ASSETS'23] [IDC'25] [ASSETS'25 (to appear)]

More than 90% of Deaf and Hard of Hearing (DHH) infants in the US are born to hearing parents. These infants are at severe risk of language deprivation, which may have a lifelong impact on linguistic, cognitive and socio-emotional development. In this project, we design and develop AR/VR and AI-mediated communication technologies that aim to support context-aware, non-intrusive and culturally relevant parent-child interaction and collaborative learning of American Sign Language (ASL). The proposed prototype enables empirical studies that collect in-depth design critiques and usability evaluations from domain experts, novice ASL learners, and hearing parents with DHH children.

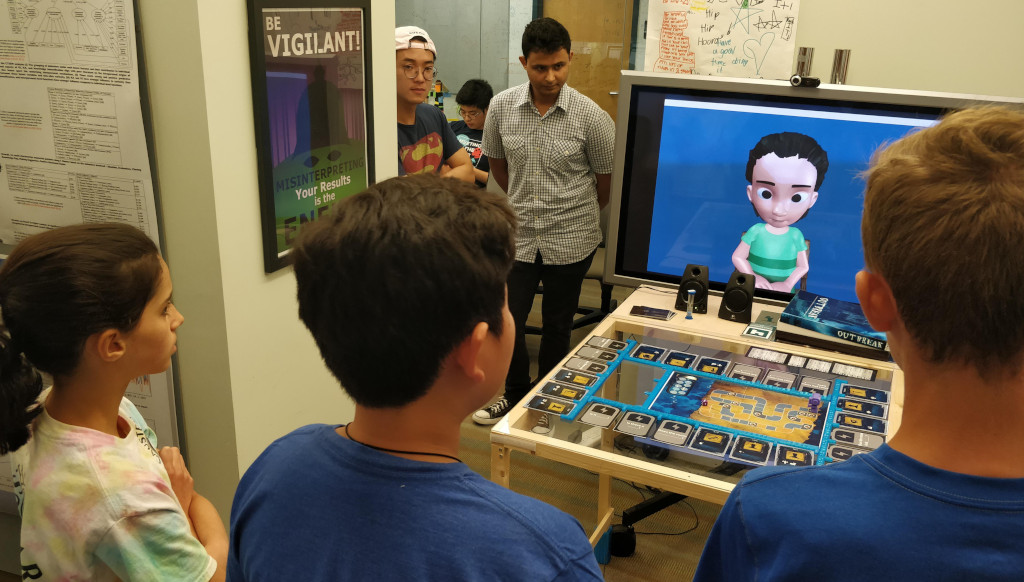

Can a Child-like Virtual Peer Elicit Curiosity in Small Group Learning?

[IVA'18]

We developed an intelligent virtual child as a peer collaborator to elicit curiosity in a multiparty educational game called Outbreak, played with children in 5th and 6th grade. We applied a child-centered, data-driven approach to design a virtual child who is age-appropriate, gender- and race-ambiguous, and demonstrates intelligence and behaviors on par with those of the target child group. Being an equal peer is essential for eliciting the cognitive dissonance that evokes curiosity, because children tend to challenge and compare each other’s ideas but may simply accept adults’ ideas due to their high knowledge authority.

Can Social Interaction Foster Curiosity?

Selected papers: [EC-TEL'17a] [EC-TEL'17b] [AIED'18]

Curiosity is an intrinsic motivation for knowledge seeking and a vital socio-emotional learning skill that promotes academic performance and lifelong learning. Social interaction plays an important role in learning, but the social account of curiosity is largely unexplored. We developed a comprehensive theoretical framework of curiosity in social contexts by combining a theory-driven approach, based on psychology, learning sciences and group dynamics, with a data-driven approach, based on empirical observation of small-group science activities in the lab, STEM classrooms and informal learning environments for children aged 9-14 from groups underrepresented in STEM education. Furthermore, we developed a computational model, using sequential behavior pattern mining, that can predict the dynamics of curiosity, including instantaneous changes in individual curiosity and convergence of curiosity across group members.

Can AR Storytelling Enhance Theory of Mind? [CHI'15]

Social play with imaginary characters and other playmates helps children develop a key socio-cognitive ability called theory of mind, which enables them to understand their own and other people's thoughts and feelings. In this project, I developed a collaborative Augmented Reality storytelling system that supports divergent thinking, emotion reasoning and communication through enhanced emotion and pretense enactment.

Can Pretense be Externalized through an AR Looking Glass?

Selected papers: [ISMAR'13] [TVCG'15]

Children with autism spectrum condition often experience difficulty engaging in pretend play, a common childhood activity that scaffolds key areas of cognitive and social development such as language, social reasoning and communication. In this project, I developed the first Augmented Reality system that supports preschool children diagnosed with high-functioning autism to conceptualize symbolic thinking and mental flexibility in a visual and tangible way.